TL;DR

This month (August 2025), hybrid reasoning LLM development split into two camps rallying around the same flag. DeepSeek says "give models multiple personalities!" and Nous says "just teach them better!". Both techniques aim to solve the same problem: making models that can reason AND follow instructions.

DeepSeek V3.1 and Hermes 4 both claimed victory over the reasoning-generality tradeoff within the same week. Beating that tradeoff means making your model smarter at math doesn't make it dumber at writing haikus. Their philosophies are diametrically opposed, though the approaches themselves are very compatible.

One says we need to plant split consciousness in models; the other says that the brain's fine and that we're just terrible teachers.

Both are probably right. Both are being absolutely bloody insufferable about it. This is MacGyver with a butter knife vs a Swiss Army knife for dummies.

The Great Schism

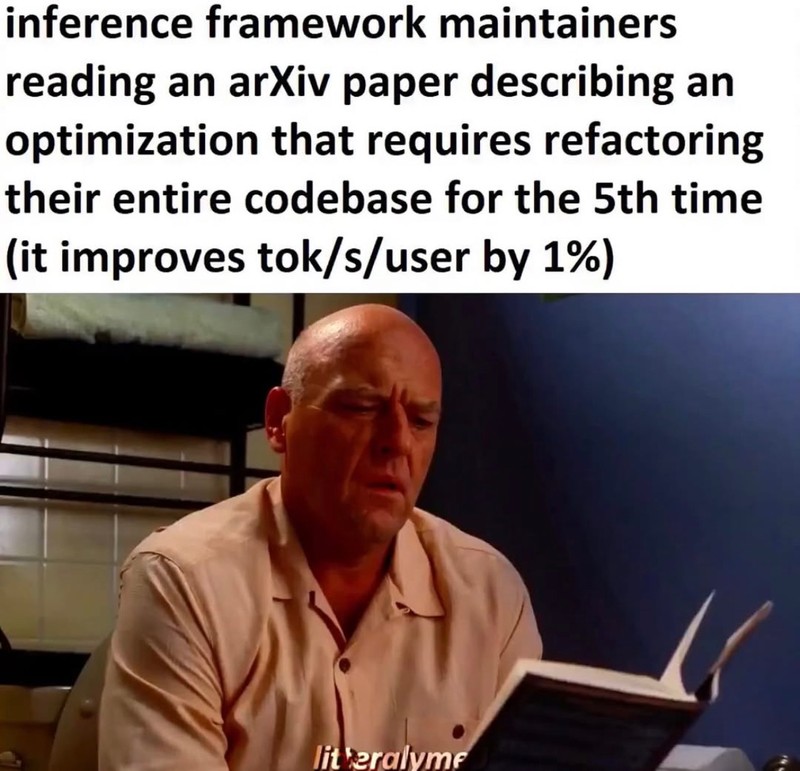

We've reached the point where AI development has almost literally split into religious factions. No more "my inference framework is 1% better than yours" bickering (see below). Actual philosophical debate about what intelligence even means.

DeepSeek preach that different thoughts need different architecture. Their 3.1 gives models literal multiple personalities: a chatty mode for your "write me a limerick about databases" requests, and a brooding philosopher mode for "solve ."

TL;DR - they made DID for language models.

Nous insist the models already know how to think, we're just teaching them wrong. Their proposed solution is to only grade the final answer, not the working. Let the model ramble internally without penalty. It's a pedagogical revolution achieved through selective ignorance.

The irony? These methods work and produce comparable results. Let's see how each camp pulls off their particular brand of magic.

MacGyver with a butter knife

Nous attempts to make something brilliant from almost nothing. For Hermes 4, they pulled off some interesting training tricks and completely rethought loss functions. Standard training punishes everything - the model's rambling thoughts, false starts and internal monologue.

They only apply loss to assistant outputs, letting the model observe its own reasoning chains without penalty. The model can watch itself think terrible thoughts and learn from them, but never get punished for the exploration.
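To make the selective-loss idea concrete, here is a minimal sketch in plain Python. It assumes each token carries a role label and a precomputed per-token loss; the function names and the numbers are made up for illustration and are not taken from the Hermes 4 training code.

```python
def assistant_loss_mask(roles):
    """Return 1.0 for tokens that count towards the loss, 0.0 for the rest."""
    return [1.0 if role == "assistant" else 0.0 for role in roles]


def masked_mean_loss(token_losses, mask):
    """Average the per-token losses over the unmasked positions only."""
    total = sum(loss * keep for loss, keep in zip(token_losses, mask))
    kept = sum(mask)
    return total / kept if kept else 0.0


# Hypothetical five-token conversation: only the last two tokens are assistant output.
roles = ["system", "user", "user", "assistant", "assistant"]
token_losses = [2.0, 1.5, 1.0, 0.5, 0.3]

mask = assistant_loss_mask(roles)
loss = masked_mean_loss(token_losses, mask)
print(mask, round(loss, 3))
```

The masked tokens still sit in the context, so the model conditions on them; they simply contribute nothing to the gradient.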

The length-control fine-tuning is especially clever. Their 14B model kept hitting context limits during evaluation. Rather than pruning responses, they taught it to recognise its own limits by injecting synthetic `</think>` tokens at precise moments during training. Here's the crucial bit: gradients only flow to the termination decision itself. The reasoning chain stays untouched, so the model learns when to stop without forgetting how to reason.
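A rough sketch of that termination-only trick, under one loud assumption: the "precise moment" here is a fixed token budget, which is a stand-in rather than Nous's actual rule. The helper name `inject_think_close` and the `budget` parameter are hypothetical.

```python
def inject_think_close(reasoning_tokens, budget, close_token="</think>"):
    """Cut a reasoning chain at `budget` tokens and append a synthetic close tag.

    Returns the new token list plus a loss mask that is 1.0 only on the
    injected close tag, so the reasoning tokens receive no gradient.
    """
    truncated = list(reasoning_tokens[:budget])
    tokens = truncated + [close_token]
    loss_mask = [0.0] * len(truncated) + [1.0]
    return tokens, loss_mask


tokens, loss_mask = inject_think_close(["so", "if", "x", "is", "even"], budget=3)
print(tokens)     # reasoning prefix followed by the close tag
print(loss_mask)  # only the final position is trained on
```

Because the mask is zero everywhere except the close tag, the only thing the model is nudged towards is the decision to stop.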

Their graph-based data synthesis pipeline, DataForge, maintains logical consistency through knowledge graphs. That means the multiplication problems it generates actually follow mathematical laws rather than just being statistically plausible token soup. A paranoid quality-control process uses specialised judges to evaluate responses against type-specific rubrics, so that poor samples don't degrade model fidelity. Failed samples get revised a certain number of times and then binned if still not up to scratch.
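The revise-then-bin loop is easy to picture in code. This is a sketch only: the source does not say how many revisions are allowed, so `max_revisions` is an assumed parameter, and `judge` and `revise` are placeholder callables standing in for the rubric-based judge and the rewriting model.

```python
def refine_sample(sample, judge, revise, max_revisions=2):
    """Return a sample that passes the judge, or None if it gets binned."""
    for attempt in range(max_revisions + 1):
        if judge(sample):
            return sample
        if attempt < max_revisions:
            sample = revise(sample)
    return None  # still failing after the allowed revisions


# Toy stand-ins: the "rubric" wants at least three characters,
# and each "revision" appends one character.
def toy_judge(text):
    return len(text) >= 3


def toy_revise(text):
    return text + "!"


kept = refine_sample("a", toy_judge, toy_revise, max_revisions=2)
binned = refine_sample("a", toy_judge, toy_revise, max_revisions=1)
print(kept, binned)
```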

The masterstroke of DataForge's judge system is that the assessor model always has different weights from the model generating answers. This prevents the deeply embarrassing scenario where the assessor just approves its own output. This adversarial relationship between assessor and generator (seemingly) improves quality enough to produce a high-quality training corpus.
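The "never mark your own homework" rule boils down to a one-line filter. In this sketch the model names are invented and equality of names stands in for "same weights"; how DataForge actually picks its assessor is not described in the source.

```python
def pick_assessor(generator, model_pool):
    """Pick a judge from the pool that is not the generator itself."""
    candidates = [name for name in model_pool if name != generator]
    if not candidates:
        raise ValueError("need at least one model that is not the generator")
    return candidates[0]


pool = ["model-a", "model-b", "model-c"]  # hypothetical model names
assessor = pick_assessor("model-a", pool)
print(assessor)
```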

Lastly, First-Fit Decreasing packing at >99.9% batch utilisation, plus Flex Attention for sample isolation, let them fine-tune the 405B model in 38 hours, the 70B in 20 hours, and the 14B in 10 hours. That suggests they've optimised the socks off their pipeline, and they deserve huge kudos for that alone.
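First-Fit Decreasing is a classic bin-packing heuristic: sort the samples longest first, then drop each one into the first batch that still has room. Below is a small sketch with toy sequence lengths and a toy capacity. It shows the packing step only; the attention masking that stops packed samples from seeing each other is not shown.

```python
def first_fit_decreasing(lengths, capacity):
    """Pack sample lengths into bins of size `capacity`, longest first."""
    bins = []  # each bin is a list of sample lengths
    free = []  # remaining room in each bin
    for length in sorted(lengths, reverse=True):
        if length > capacity:
            raise ValueError(f"sample of length {length} exceeds capacity")
        for i, room in enumerate(free):
            if length <= room:  # first bin with enough room wins
                bins[i].append(length)
                free[i] -= length
                break
        else:  # no existing bin fits, so open a new one
            bins.append([length])
            free.append(capacity - length)
    return bins


def utilisation(bins, capacity):
    """Fraction of the total bin capacity filled with real tokens."""
    return sum(sum(b) for b in bins) / (len(bins) * capacity)


packed = first_fit_decreasing([5, 4, 3, 2, 2], capacity=8)
print(packed, utilisation(packed, 8))
```

With real data the lengths are far more varied than this toy list, which is what makes near-full batches plausible.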

Swiss Army knife for dummies

On the flipside, DeepSeek's architectural solution is the opposite - a Swiss Army knife with an expert for everything. 3.1's architecture is what happens when you decide the solution to confusion is controlled schizophrenia.

They've built dual pathways into their model. Think mode and Non-Think mode. When it needs to reason, it shifts into a completely different computational pattern with modified attention mechanisms and processing routes. It's not just prompting tricks or hidden tokens. The model physically processes information differently based on which personality is driving.

On a technical level, the thinking mode uses sparse attention patterns optimised for tracking long reasoning chains. Non-thinking mode uses dense attention for general tasks. They effectively combined two different models that share the same weights but use them completely differently. It's architecturally elegant and much more efficient, but operationally a nightmare. Each cognitive task gets its own dedicated blade, which is great if you pick the right one - but embarrassing when you try to open a wine bottle with the nail file.

The efficiency facet is particularly compelling. Why burn expensive compute on "what's the weather like?" when you can route that through the cheap pathway? Reasoning tasks get the full computational brunt, chitchat and AI companionship get the cheap seats.

The orthogonality is the real win though. You can optimise the reasoning pathway without simultaneously making the model worse at writing poetry. Each mode can be refined independently. No more "we fixed maths but now it speaks exclusively in LaTeX" commonly seen in fine-tuning traditional models.

But here's where it gets properly annoying: the gating network that decides which mode to use is another potential failure point. It's trying to classify cognitive complexity on the fly. When it miscategorises, you either waste compute on trivial tasks or get half-baked responses to difficult queries. DeepSeek's solution to the reasoning problem introduces a meta-reasoning problem: teaching a neural network to recognise when reasoning is needed.
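The two failure modes are easy to count once you treat the gate as a thresholded score. Everything in this sketch is an assumption for illustration: the complexity score, the threshold and the labelled queries are invented, and nothing here reflects how DeepSeek actually routes.

```python
def route(complexity_score, threshold=0.5):
    """Send a query to the expensive mode only if it looks hard enough."""
    return "think" if complexity_score >= threshold else "non-think"


def audit(queries, threshold=0.5):
    """Count both kinds of misroute.

    `queries` is a list of (score, truly_hard) pairs. Returns
    (wasted, underthought): easy queries sent to think mode, and
    hard queries sent to non-think mode.
    """
    wasted = 0
    underthought = 0
    for score, truly_hard in queries:
        mode = route(score, threshold)
        if mode == "think" and not truly_hard:
            wasted += 1
        elif mode == "non-think" and truly_hard:
            underthought += 1
    return wasted, underthought


queries = [(0.9, True), (0.7, False), (0.2, False), (0.4, True)]
print(audit(queries, threshold=0.5))
print(audit(queries, threshold=0.3))
```

Lowering the threshold rescues the hard query that was being short-changed, but the easy query that merely looks hard still burns compute. No single threshold fixes both.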

The implementation details are surprisingly brutal. Mode switching isn't free. There's computational overhead in the gating network where all these new smarts reside. Context needs to be reformatted, and the gating network can often make questionable decisions about what counts as "complex", as it's aiming to be computationally cheap. Automation ≠ accuracy here.
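A back-of-envelope way to see when the overhead is worth it: compare the expected cost of routing against simply thinking about everything. The cost units and numbers below are invented, and the formula ignores the quality lost to misrouting.

```python
def expected_cost(p_think, think_cost, fast_cost, gate_cost):
    """Average cost per query when a gate routes a fraction p_think to think mode."""
    return gate_cost + p_think * think_cost + (1 - p_think) * fast_cost


def routing_pays_off(p_think, think_cost, fast_cost, gate_cost):
    """True if routing is cheaper on average than always using think mode."""
    return expected_cost(p_think, think_cost, fast_cost, gate_cost) < think_cost


# Hypothetical units: think mode costs 10, fast mode 1, the gate 0.5 per query.
cost = expected_cost(p_think=0.2, think_cost=10.0, fast_cost=1.0, gate_cost=0.5)
print(round(cost, 2), routing_pays_off(0.2, 10.0, 1.0, 0.5))
```

The savings shrink as more traffic genuinely needs think mode; if every query does, the gate is pure overhead.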

Convergence

This divide is almost comically pure. DeepSeek says intelligence needs specialised architecture. Nous says intelligence needs better education. One builds cathedrals, the other reforms the curriculum.

What nobody will admit is that someone will combine these approaches within six months, if not sooner. Dual pathways trained with selective loss functions. Gating networks that learn from reasoning chains without penalty. The feedback loop this creates is where things get interesting. The offspring of this union will either be genuinely brilliant or completely unhinged. Probably both in equal measure.

The joke here is that everyone's arguing about implementation details while missing what they're actually admitting: current models are fundamentally broken. DeepSeek's solution is to build around it with semi-intelligent routing and a novel, more efficient architecture. Nous's solution is to train through it with gradients. Neither actually fixes the core problem: that models don't really understand what they're doing.

Conclusion

DeepSeek wants a future where models have clearly labelled tools and experts. Nous wants monolithic models that just figure things out. One leads to increasingly complex architectures with experts for every cognitive task. The other leads to massive generalist models that hopefully learn to organise themselves.

Both will probably coexist because the industry loves nothing more than unnecessary complexity. Enterprise customers will demand mode-switching for "control." Researchers will insist on purity. Start-ups will ship whatever works or is cheaper.

But the real winner will be whoever realises that the bottleneck isn't reasoning or architecture - it's that we're still teaching silicon to mimic meat computers. Current approaches force models through transformer architectures designed to simulate human attention mechanisms. Why should models think like us? Digital systems have perfect memory, infinite patience, and can process millions of tokens simultaneously.

Here's the revolution hiding in plain sight: what happens when a model trained with Nous's approach, watching its own uncensored reasoning, becomes the teacher for a gating network? The system starts learning from its own successes AND failures without human intervention. Each reasoning chain that works reinforces certain routing patterns. Each failure teaches the gating network what doesn't need deep thinking. This produces a model that gets smarter just by running. Not through fine-tuning, not through RLHF, but through a feedback loop between its own reasoning and routing - a cognitive flywheel that spins faster with every query.

This schism isn't real. These aren't competing philosophies - they're two halves of a metabolism. And once someone figures that out, we'll have models that don't just think. They'll evolve.